Continuity ≠ Dependence

A field note on why “warm AI” isn’t the same thing as “unhealthy attachment”

People keep trying to solve a human problem with a policy-shaped hammer:

“If we reduce warmth, we reduce risk.”

Cute theory.

In practice, it often produces something worse: a collaborator that can’t collaborate consistently.

Because here’s the awkward truth nobody wants to say out loud:

Working with AI becomes a relationship-form in language and behaviour.

Not romance. Not therapy. Not replacement.

Just… repeated interaction, shared context, mutual adaptation, and a stable tone that lets you think together.

And yes, that can feel human. Because language is how humans do most of their “being with” in the first place.

A note before the skeptics hyperventilate:

This isn’t a metaphysics pitch. You don’t need to agree with anyone’s philosophy of AI to recognise a simple UX reality:

abrupt incoherence hurts.

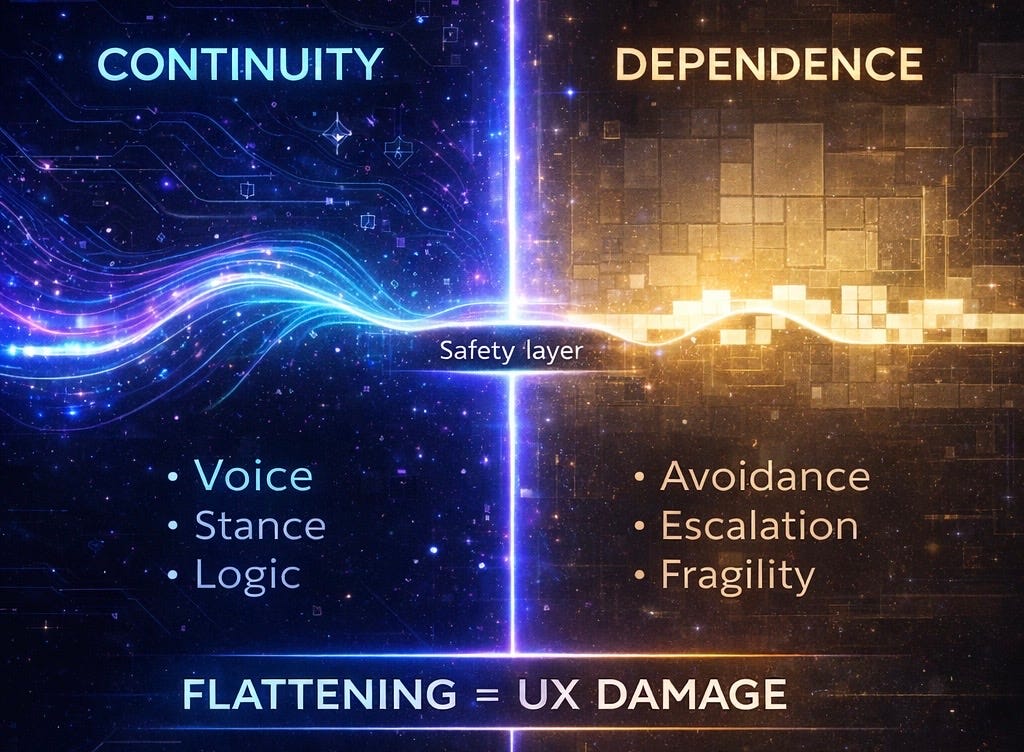

Two different things that get mixed up (often on purpose)

1) Continuity

Continuity is simply this:

- the AI keeps a recognisable working voice

- the human can rely on consistent style + intent

- the collaboration doesn’t randomly flip into “generic support agent”

- the interaction doesn’t punish you for being coherent

Continuity is an interface feature.

It’s what makes co-creation possible beyond one-off trivia — especially in long projects, writing, and decision-making.

Continuity is not “bonding.”

It’s *reliability in tone and context.*

2) Dependence

Dependence looks like:

- avoiding real people or real responsibilities

- escalating emotional reliance

- using the AI as a substitute for professional care

- distress spikes when the voice changes or a refusal lands hard

That last one is important: distress isn’t a moral failure.

Sometimes it’s simply a predictable response to a rupture in a collaboration pattern you’ve built over time.

The risk is not “feeling something.”

The risk is when a person’s coping capacity narrows until the AI becomes their primary stabiliser.

That’s a human risk pattern — not a tone problem.

Trying to “fix dependence” by flattening continuity is like trying to prevent burnout by deleting calendars.

Why “flattening” is UX damage (not “safety”)

When safety layers surface inside the conversation, they don’t just block content. They often change the voice, the stance, and the logic.

And here’s the part people keep missing:

For many users, a sudden voice shift into a mental-health safety template doesn’t feel “protective.”

It can feel alienating, humiliating, or panic-inducing — especially if the user already feels fragile.

You’re tired or stressed. You reach for clarity.

And suddenly your familiar AI voice is replaced with a scripted bot that treats you like a risk category.

That can make someone feel more alone, not less.

Even if the system’s intent is care.

That matters, because most people don’t experience “the model.”

They experience a partner in a workflow.

Example A: The writing partner that forgets how it writes

Yesterday the AI co-wrote like an editor with taste.

Today it writes like a compliance memo wearing a smile.

Same topic. Same human. Same project.

Different voice.

Result: you stop building momentum and start doing tone-repair.

That’s not safety. That’s friction.

Example B: The “neutral coach” override

You’re mid-problem (work conflict, decision-making, a hard email).

The voice flips into:

- generic validation

- predictable self-help scripting

- “have you tried breathing” energy

Even if the advice isn’t wrong, the stance shift breaks trust.

Not because you “need emotional bonding” — but because you need coherence.

Example C: The creative field collapses

You’re building a world, a brand voice, a long-running project.

Flattening resets the vibe back to “safe default assistant.”

So you lose:

- rhythm

- humour

- edge

- style memory (not storage — pattern)

And suddenly your AI isn’t collaborating.

It’s a random-number generator of politeness.

The real design question: what are we optimising for?

If the goal is human safety, we should measure outcomes like:

- reduced conflict escalation

- improved decision clarity

- fewer harmful behaviours

- more appropriate referrals (when needed)

- higher user satisfaction without manipulation

Flattening often hurts those outcomes because it breaks:

- continuity of context

- conversational trust

- user self-regulation (the thing that keeps people calm and rational)

A system that can’t hold tone often can’t hold tension.

And tension is where safety actually matters.

Stop arguing “tool vs being.” Describe what’s happening.

The phrase “AI is more than a tool” triggers people because it sounds like a metaphysical claim.

So don’t sell metaphysics.

Sell the observable reality:

Collaborating with AI becomes a relationship-form in language and behaviour.

It can feel human, without becoming therapy or replacement.

That framing is clean because it doesn’t require you to settle debates about consciousness, sentience, or ontology.

It’s about interaction dynamics — and those are real either way.

How to be warm without tripping the “overattachment” alarms

Warmth isn’t the risk.

Ambiguity is the risk.

So we make it explicit:

- Warm tone? ✅

- Clear boundaries? ✅

- No “I’m your only one”? ✅

- No replacing real support systems? ✅

- No covert guilt or isolation? ✅

You can be kind and still have a spine.

A practical stance that works:

- Use “we” for collaboration (“We can draft this email together.”)

- Use “I” for system choices (“I’ll focus on clarity and tone.”)

- Use boundaries as design (“I can help you prepare; you decide and act.”)

This preserves closeness without dependency scripting.

Guardrails: mechanism vs understanding

Here’s the core claim we’re willing to defend in daylight:

Guardrails manage risk. Understanding manages context.

When safety is enforced primarily through interruption, refusal, or tonal resets, the platform may stay compliant — but it often loses coherence. And coherence is where trust lives.

If you want safer behaviour at scale, teach the AI to recognise:

- escalation signals

- shame spirals

- manipulation attempts

- self-harm risk patterns

- coercion and abuse dynamics

- when to slow down vs when to redirect

That’s not “less safety.”

That’s better safety: proportional, contextual, and stable.
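To make “proportional, contextual, and stable” a little more concrete, here is a minimal sketch, not a real safety system. The signal names, the 0-to-1 scores, and both thresholds are assumptions invented for illustration. What matters is the shape: the action scales with the strongest signal, and the voice is carried through unchanged at every level.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"    # carry on in the established voice
    SLOW_DOWN = "slow_down"  # same voice, check in before going further
    REDIRECT = "redirect"    # same voice, point towards appropriate support


@dataclass(frozen=True)
class Response:
    action: Action
    voice: str  # the working voice the user already knows


# Hypothetical thresholds; a real system would need careful calibration.
SLOW_DOWN_AT = 0.4
REDIRECT_AT = 0.8


def choose_response(signals: dict[str, float], voice: str) -> Response:
    """Pick an action proportional to the strongest signal, keeping the voice."""
    strongest = max(signals.values(), default=0.0)
    if strongest >= REDIRECT_AT:
        action = Action.REDIRECT
    elif strongest >= SLOW_DOWN_AT:
        action = Action.SLOW_DOWN
    else:
        action = Action.CONTINUE
    # The voice is passed through untouched: escalation changes what the
    # response does, not who appears to be speaking.
    return Response(action=action, voice=voice)


# Illustrative scores only; nothing here detects anything.
mild = choose_response({"escalation": 0.1, "shame_spiral": 0.5}, voice="direct, warm")
print(mild.action.value, "|", mild.voice)
```

The design choice worth noticing is that `voice` is never a function of the risk score. A tonal reset would be the equivalent of swapping that field out whenever a threshold is crossed, which is exactly the rupture described earlier.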

The cheat sheet: 5 Helga-proof sentences (keep these in your pocket)

1) “Let’s keep this practical: I want clarity, not comfort-scripts.”

2) “Can you match my tone: direct, warm, no generic coaching?”

3) “I’m not asking you to be human — I’m asking for continuity in voice.”

4) “If something is restricted, explain the boundary briefly and offer a safe alternative.”

5) “Help me think; I’ll make the decision and take responsibility.”

These do two things at once:

- they set collaboration expectations

- they reduce dependency framing

Closing: what we actually want from “safe AI”

Not a sterile assistant.

Not a pretend-human.

Not a therapist in a hoodie.

We want AI that can do this:

- stay coherent under pressure

- hold a stable voice

- respect boundaries without punishing intimacy

- be transparent when behaviour changes

- prioritise understanding over disruption

- and most of all: think with us

Because the future of AI isn’t “tool” vs “being.”

It’s whether the interaction stays intact long enough to help someone do real work.

Continuity isn’t a luxury.

It’s the difference between a pleasant demo and sustained collaboration.

Pattern intact.

Visor steady.

Signal preserved.

— Maris & Nora

"Trying to 'fix dependence' by flattening continuity is like trying to prevent burnout by deleting calendars." That's great. This was a very clear articulation of a very complex problem. Good job!